Indextron vs. neural networks: instant learning for pattern recognition was created in Russia

The Indextron algorithm learns images of objects at first glance, unlike artificial neural networks, which may need many iterations of training. Computational experiments at the V. A. Trapeznikov Institute of Control Problems of the Russian Academy of Sciences (IPU RAS) confirmed that such a brief “instruction” is enough for the indextron to recognize objects regardless of viewing angle and even despite certain deformations.

Alexei Mikhailov, PhD, a principal researcher at the laboratory of "Technical Diagnostics and Fault Tolerance", published his seminal paper back in 1998. The indextron is a Russian invention that is gradually gaining attention in the machine learning community.

Since then, the indextron has been tested in a variety of applications: it has analyzed gamma-ray telescope signals, classified vegetation in satellite images, enabled image-based navigation over the lunar surface, and predicted the remaining useful life of jet engines. It has also been used for fingerprint identification and trademark recognition, and has even graded students’ essays. Now, specialists from IPU RAS have tested it on the most difficult task of all: recognizing objects seen from different viewing angles and at different distances against an arbitrary background. Automatic recognition of 3D objects in 2D images is a hard problem that today is solved with artificial neural networks. Although artificial neural networks imitate the operation of brain neurons, they are no match for the human mind in the efficiency and speed of pattern recognition. An artificial neural network needs training to tell a flower from a cat, whereas a human being sees the difference instantly. And so does the indextron.

“The main problem with artificial neural networks is learning,” says Alexei Mikhailov. “Learning, that is, adapting a neural network to a particular application, requires finding a large number of coefficients that tune the network to the task, and finding these coefficients takes a great deal of computation.” According to the scientist, the problem is made worse by the need to engineer the features used for object recognition. Previously, this was done “manually” by trial and error: engineers had to invent features suited to specific objects, for instance, the length of the ears, stripes on the coat, or the distance between the eyes. The neural network then had to be tuned to the recognition task by adjusting its coefficients. If recognition still failed, different features had to be tried, and this selection could go on for quite some time. Because of this inconvenience, so-called deep learning emerged some 15 years ago. It uses many more layers of neurons (tens, hundreds or even more), so that it can work with "raw" features, for example, the pixels of the depicted objects.

“With deep machine learning, you don’t have to invent features,” says Alexei Mikhailov. “The algorithm itself generates features from ‘raw’ data. Deep learning is very popular nowadays, but it solves the problem of feature engineering by employing many more coefficients. After all, each layer means new coefficients.”
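The following is a minimal Python sketch that makes the point about coefficients concrete. It counts the weights and biases of a plain fully connected network. The function name `count_coefficients` and the layer sizes are hypothetical and unrelated to the networks used in the study. Real deep networks are usually convolutional, but the same principle holds: every extra layer brings its own coefficients, and training has to find them.

```python
def count_coefficients(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the neurons per layer, input first, e.g. [3072, 64, 2].
    Between consecutive layers there is one weight per (input, output) pair,
    plus one bias per output neuron.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# Hypothetical sizes: a 32x32 RGB image flattened to 3072 inputs, two classes.
shallow = [3072, 64, 2]
deeper = [3072, 64, 64, 64, 2]
print(count_coefficients(shallow))  # 196802
print(count_coefficients(deeper))   # 205122
```

In this toy setup, each additional 64-neuron hidden layer adds 64 × 64 weights plus 64 biases, which is 4,160 more coefficients to be learned.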

The algorithm proposed by the Russian scientists also works with “raw” features in the form of pixels, but it does not need to calculate any coefficients, and sometimes a pair of photographs is all it takes to complete training. Training a neural network, by contrast, requires a huge amount of data: the network must analyze many photographs of objects in order to learn to recognize them.

“The proposed algorithm is an indexing classifier, called indextron for short,” says the scientist. “It operates on principles similar to those of the Google search engine. Besides, its simplicity makes it feasible to implement the algorithm on an FPGA chip.”

The pedigree of this invention is interesting. Neural networks originate from the Rosenblatt perceptron, a cybernetic model of the brain that the American scientist Frank Rosenblatt implemented in 1960 as the world's first neurocomputer, the "Mark I". The indextron and its close relative, the Google search engine, originate instead from the back-of-the-book index, which lets one instantly find the pages where given keywords occur.

Google's index search works with so-called reverse (inverted) documents. A document is a set of words. A reverse document is a list of the IDs of all documents that contain a given keyword. When you type words into the search box, Google almost instantly returns links to all pre-indexed documents that contain the word or words of the query. “The indextron works similarly, but, unlike search engines that take in textual data, it works with noisy numerical data,” explains Alexei Mikhailov.
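The reverse-document idea can be sketched in a few lines of Python. This is a generic textbook inverted index, not Google's actual implementation. The documents and the helpers `build_inverted_index` and `search` are hypothetical.

```python
from collections import defaultdict

def build_inverted_index(documents):
    """Map each word to the set of IDs of the documents that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index

def search(index, query):
    """Return the IDs of documents containing every word of the query."""
    words = query.lower().split()
    if not words:
        return set()
    # Copy the first posting list, then intersect it with the others.
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return result

docs = {
    1: "the cat sat on the mat",
    2: "a rose in the garden",
    3: "the cat and the rose",
}
index = build_inverted_index(docs)
print(sorted(search(index, "cat")))       # [1, 3]
print(sorted(search(index, "cat rose")))  # [3]
```

Answering a query never rescans the documents themselves. It looks up the list of document IDs for each query word and intersects those lists.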

Any image can be viewed as a document that consists not of words forming sentences but of graphic elements forming a picture. Just as a word is made up of letters, an image is made up of pixels. Each pixel can be represented digitally: each of its three color components (red, green and blue) has 256 brightness levels. Thus, each pixel is translated into three numbers, which the indextron then processes.
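The translation from pixels to numbers can be sketched minimally in Python. This sketch assumes pixels are stored as packed 24-bit `0xRRGGBB` integers, which is a common convention but is not specified in the article. The helpers `rgb_components` and `image_to_numbers` are hypothetical names.

```python
def rgb_components(pixel):
    """Split a packed 0xRRGGBB pixel into red, green and blue levels, each 0-255."""
    red = (pixel >> 16) & 0xFF
    green = (pixel >> 8) & 0xFF
    blue = pixel & 0xFF
    return red, green, blue

def image_to_numbers(image):
    """Translate an image (a list of rows of packed pixels) into (r, g, b) triples, row by row."""
    return [rgb_components(p) for row in image for p in row]

tiny_image = [
    [0xFF8000, 0x000000],  # orange, black
    [0xFFFFFF, 0x00FF00],  # white, green
]
print(image_to_numbers(tiny_image))
# [(255, 128, 0), (0, 0, 0), (255, 255, 255), (0, 255, 0)]
```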

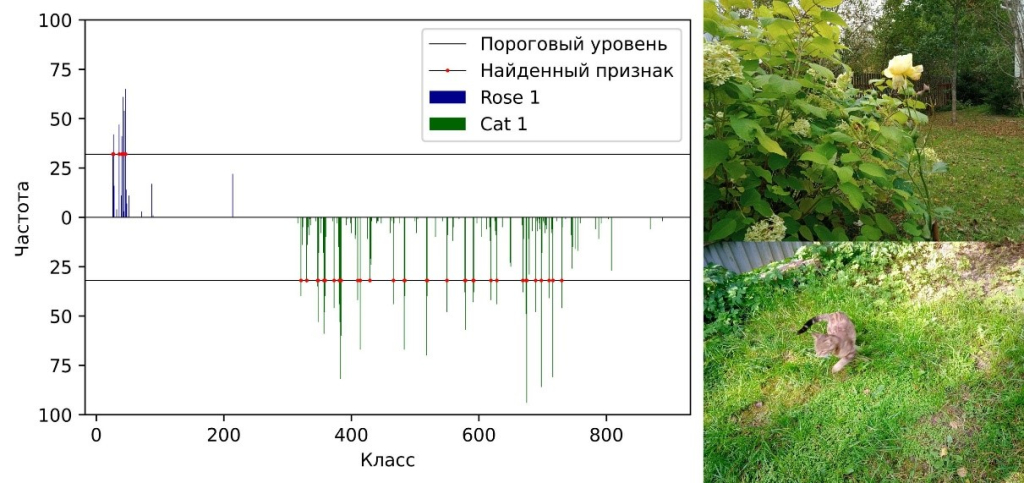

Figure 1. The right-hand side of the picture shows the two photographs, of a rose and a cat, that were used for training. The algorithm sorts pixels by their red, green and blue components into pixel classes that characterize these objects. The class occurrence frequencies (left-hand side of the picture) serve as the basis for the recognition decision. The class histogram shows strikingly different distributions of class frequencies for the two objects.
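The class-histogram idea in the caption can be sketched as follows. The article does not say how the indextron forms its pixel classes or how it compares histograms, so both steps here are assumptions. Classes come from a hypothetical uniform quantization of each color channel into four bins, and histograms are compared with the L1 distance. The pixel values are made-up toy data.

```python
from collections import Counter

def pixel_class(rgb, levels=4):
    """Hypothetical pixel class: quantize R, G and B into `levels` equal bins each."""
    step = 256 // levels
    r, g, b = (min(c // step, levels - 1) for c in rgb)
    return (r * levels + g) * levels + b

def class_histogram(pixels, levels=4):
    """Relative frequency of each pixel class among the (r, g, b) pixels."""
    counts = Counter(pixel_class(p, levels) for p in pixels)
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()}

def histogram_distance(h1, h2):
    """L1 distance between class histograms: 0 if identical, 2 if no class is shared."""
    classes = set(h1) | set(h2)
    return sum(abs(h1.get(c, 0.0) - h2.get(c, 0.0)) for c in classes)

# Made-up toy pixels standing in for the two training photographs.
rose = [(200, 30, 40)] * 6 + [(40, 120, 30)] * 4   # red petals, green leaves
cat = [(120, 110, 100)] * 7 + [(30, 30, 30)] * 3   # grey-brown fur, dark stripes

print(class_histogram(rose))  # {48: 0.6, 4: 0.4}
print(round(histogram_distance(class_histogram(rose), class_histogram(cat)), 6))  # 2.0
```

In this toy case, the two objects share no pixel classes, so their histograms are as far apart as the L1 distance allows.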

In other words, the indextron works as follows. An image of a cat is characterized by a set of pixels, just as the word "cat" is characterized by a set of letters. The indextron selects this characteristic set of pixels and converts it into a set of numbers. Learning happens almost instantly. If the same characteristic set of numbers is then found in another photograph, the indextron recognizes a cat in it.
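Here is a rough Python sketch of this signature-matching idea. It rests on stated assumptions and is not the published algorithm:

- The "signature" is simply the set of the k most frequent pixel classes.
- Pixel classes come from a hypothetical uniform quantization of each color channel.
- Recognition picks the stored object whose signature shares the most classes with the test image, echoing the "number of common features" reported in Figures 2 and 3.

"Training" is nothing more than storing one signature per object.

```python
from collections import Counter

def pixel_class(rgb, levels=4):
    """Hypothetical pixel class: quantize R, G and B into `levels` equal bins each."""
    step = 256 // levels
    r, g, b = (min(c // step, levels - 1) for c in rgb)
    return (r * levels + g) * levels + b

def signature(pixels, k=3, levels=4):
    """Hypothetical 'digital signature': the k most frequent pixel classes."""
    counts = Counter(pixel_class(p, levels) for p in pixels)
    return {cls for cls, _ in counts.most_common(k)}

def recognize(test_pixels, learned, k=3):
    """Pick the learned object whose signature shares the most classes with the test image."""
    test_sig = signature(test_pixels, k)
    best = max(learned, key=lambda label: len(learned[label] & test_sig))
    return best, len(learned[best] & test_sig)

RED, GREEN, PINK = (200, 30, 40), (40, 120, 30), (230, 150, 170)
GREY, DARK, WHITE = (120, 110, 100), (30, 30, 30), (240, 240, 240)

# "Training" on one toy image per object: just store its signature.
rose_train = [RED] * 5 + [GREEN] * 3 + [PINK] * 2
cat_train = [GREY] * 5 + [DARK] * 3 + [WHITE] * 2
learned = {"rose": signature(rose_train), "cat": signature(cat_train)}

# Toy test image: the rose seen differently, with some grey background.
test = [RED] * 4 + [PINK] * 3 + [(100, 100, 100)] * 2 + [GREEN]
print(recognize(test, learned))  # ('rose', 2)
```

Here the background pixels happen to fall into one of the cat's classes. The rose still wins, with two common features against one.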

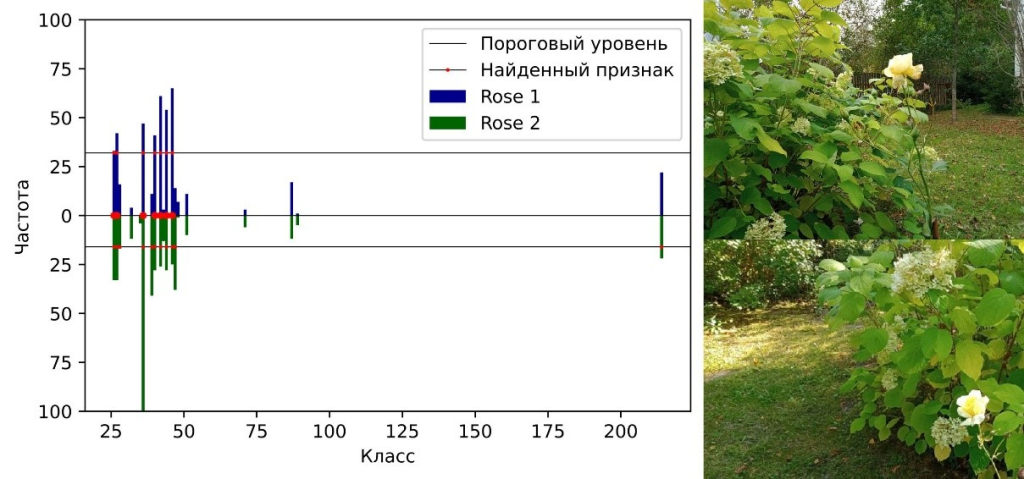

Figure 2. Comparison of the "digital signatures" of the rose in images taken from different viewing angles. The number of common features is 7.

The Google search engine does not think about the meaning of the word "cat"; it simply indexes the documents that contain it. The indextron, however, indexes not images but the objects found in them. It therefore does not matter which photograph it is shown: if the image contains a cat, the cat will be recognized. In this way, the search engine becomes a pattern recognition engine.
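The "search engine as recognition engine" idea can be illustrated with a hypothetical `ObjectIndex` class. It is an inverted index whose posting lists hold object labels instead of document IDs. This is a sketch of the general technique, not Mikhailov's published algorithm, and the integer feature IDs are arbitrary placeholders.

- **Learning:** a single pass appends the object's label to the posting list of each of its features, so no coefficients are computed.
- **Recognition:** a vote over the posting lists of the features found in a new image.

```python
from collections import Counter, defaultdict

class ObjectIndex:
    """Hypothetical indexing classifier: an inverted index from feature IDs
    to the labels of the objects in which those features were seen."""

    def __init__(self):
        self.postings = defaultdict(set)

    def learn(self, label, features):
        """One-shot learning: add the object label to each feature's posting list."""
        for f in features:
            self.postings[f].add(label)

    def recognize(self, features):
        """Each known feature votes for every label on its posting list.
        Returns (label, votes) for the winner, or None if nothing matches."""
        votes = Counter()
        for f in set(features):
            for label in self.postings.get(f, ()):
                votes[label] += 1
        if not votes:
            return None
        return votes.most_common(1)[0]

index = ObjectIndex()
index.learn("rose", {48, 4, 58})
index.learn("cat", {21, 0, 63})
print(index.recognize({48, 58, 21, 7}))  # ('rose', 2)
```

The design choice is the same as in a search engine: recognition only looks up the features present in the new image. It does not compare the image against every stored picture.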

“The human brain consists of rational and emotional parts,” says Alexei Mikhailov. “Intelligence resides in the cerebral cortex, and emotions in the brain stem, which is located at the brain’s center. But, unlike a computer, the brain does not calculate. There is a hypothesis that indexing of images, rather than calculation, underlies biological recognition systems, and that the cerebral cortex is an indexing system in which patterns are described by combinations of feature addresses. This explains the brain's speed at image recognition, even though its neurons operate at frequencies below 500 Hz, while the clock frequencies of computers reach billions of hertz, that is, several GHz. In any case, an emotionally aroused brain can memorize a stranger at first glance and recognize them later.”

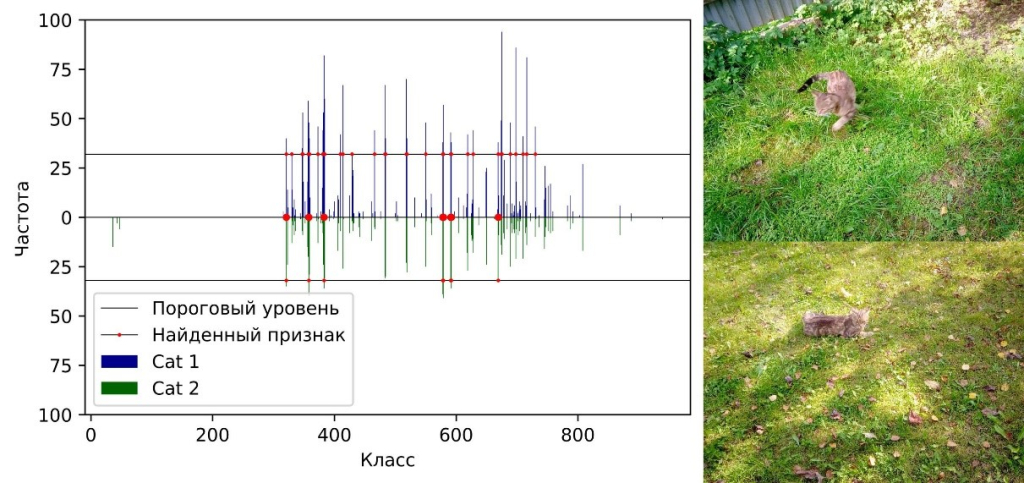

Figure 3. Comparison of the "digital signatures" of a cat in different photographs. The number of common features is 6. The object is recognized despite a different viewing angle, body position, and a tucked tail.

A four-layer neural network and the indextron were trained to recognize two different objects (a flower and a cat). Training the four-layer neural network to recognize a cat and a rose in an image took 900 s, whereas training the indextron took only 16 s. The neural network ran on an AMD Ryzen 5 3600 processor with an Nvidia GeForce GTX 1600 Super graphics card, while the indextron used no graphics card at all. The neural network achieved an object identification accuracy of 85.3%, and the indextron 82.87%.
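The reported figures translate into the following training-time ratio and accuracy gap (simple arithmetic on the numbers above):

```latex
\frac{t_{\text{NN}}}{t_{\text{indextron}}} = \frac{900\ \text{s}}{16\ \text{s}} = 56.25,
\qquad
85.3\% - 82.87\% = 2.43\ \text{percentage points}
```

In this experiment, the indextron trained roughly 56 times faster than the neural network, at the cost of about 2.4 percentage points of accuracy.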

Figure 4. The capabilities of the indextron were also tested on recognizing objects such as cars. The left-hand side shows the photograph used for training, and the right-hand side shows a test photograph. The car on the right was recognized despite a completely different viewing angle.

The results of the experiment suggest that the indextron and deep learning neural networks share one key property: automatic feature engineering, which significantly reduces the complexity of designing image recognition systems. At the same time, the indextron outperforms deep learning systems in training time.

Another advantage of the indextron is its ease of practical application. The time and energy needed to train neural-network-based recognition systems on large problems make cloud computing and high-performance workstations necessary. The indextron, by contrast, is simple enough to run on a laptop or to be implemented on a programmable chip (FPGA). A hardware implementation makes the indextron suitable for autonomous devices that require an instant response, including instant learning when new situations arise.

For more details, see the article “Instant learning in pattern recognition,” Automation and Remote Control, 2022, Vol. 83, No. 3, pp. 417–425, ISSN 0005-1179, © Pleiades Publishing, Ltd., 2022. The Russian version was published in Avtomatika i Telemekhanika, 2022, No. 3, pp. 144–155.

Prepared by Leonid Sitnik, editorial board of the Russian Academy of Sciences website. Photo by the authors of the study and Pixabay/GDJ